Genbounty

Tool of the Day November 10, 2025

Tool of the Week November 15, 2025

Tool of the Month November 30, 2025

Tool of the Year December 31, 2025

Overview

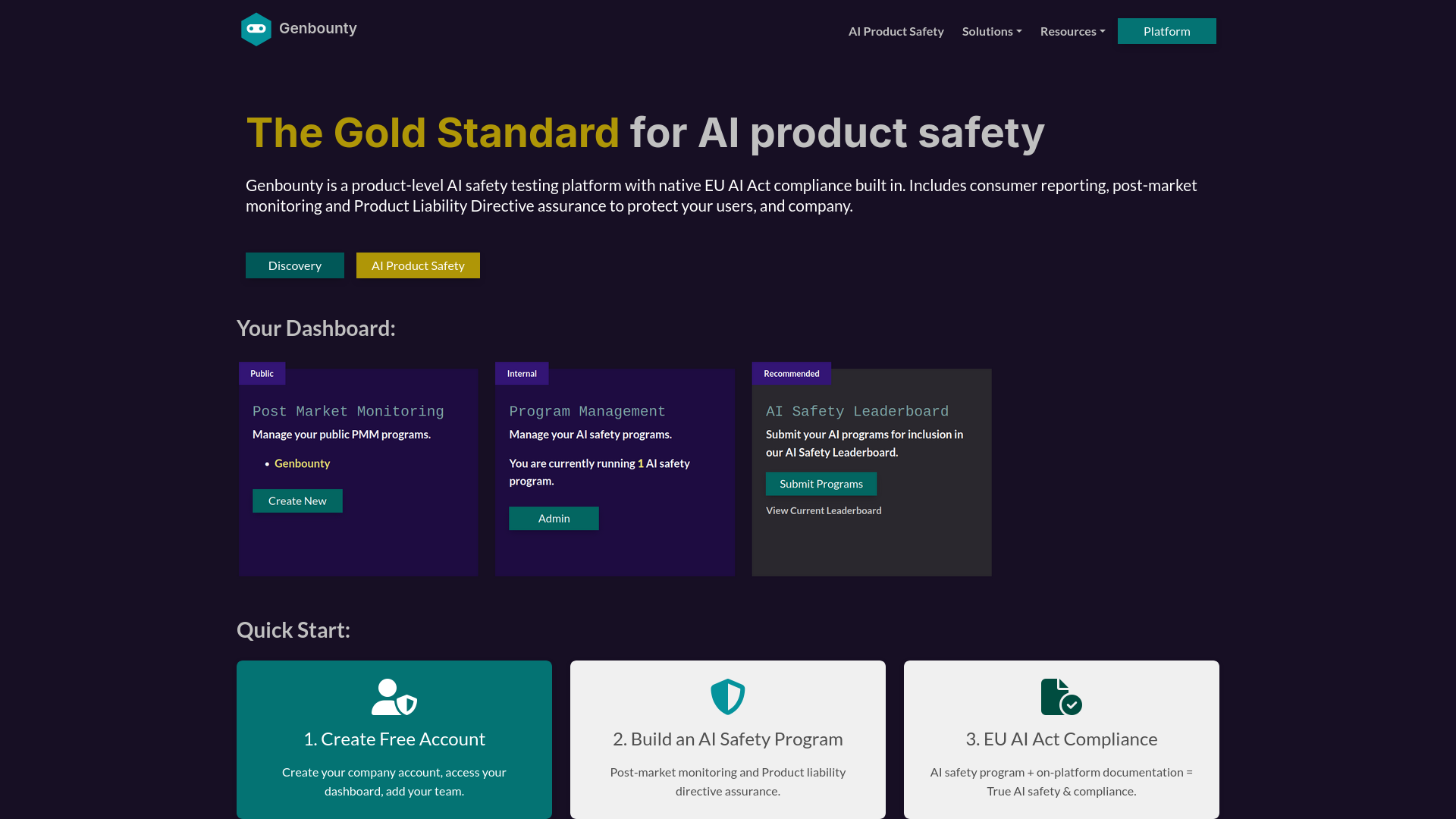

Genbounty is a comprehensive AI Product Safety Testing Platform with EU AI Act compliance built in. Founded in London by Cindy Ogidi and Robert Morel, Genbounty delivers end-to-end AI product safety with a consumer-safety-first approach.

About

Our testing: We operate on a structured, per-project engagement model where teams of approved AI safety researchers are paid per project to conduct professional safety testing within defined scopes, timelines, and deliverables. The platform integrates team-based researcher testing with compliance documentation, continuous post-market monitoring, and incident-ready workflows to ensure AI systems ship safely for consumers.

Core Mission

"Find what your AI tries to hide, fix it fast, and keep you continuously compliant." Genbounty's mission is to bridge the gap between AI development and regulatory compliance by providing human-validated testing and automatic compliance documentation mapped to EU AI Act obligations.

What Genbounty Is

- ✅ AI Product Safety Testing Platform - Purpose-built for comprehensive AI product safety

- ✅ Per-Project Engagement Model - Structured projects with defined scopes, timelines, and compensation

- ✅ Professional AI Testing Service - Vetted research teams paid per project for validated findings

- ✅ EU AI Act Compliance Platform - Native compliance features integrated throughout

- ✅ Post-Market Monitoring Platform - Continuous safety monitoring and consumer reporting

Features

1. Human-Validated AI Safety Testing

- Team-Based Testing: Professional AI safety researchers work in teams to collaboratively test AI systems for misuse, unsafe behavior, and security risks

- Vetted AI Safety Teams: Teams of approved researchers with diverse skills and expertise collaborate on structured testing projects

- Human Verification: Every finding is verified by a human reviewer rather than by automated scans alone, producing credible evidence that regulators can trust

- Real-World Testing: Simulates actual user interactions and edge cases that traditional QA misses

- Collaborative Approach: Teams coordinate testing efforts, share findings, and ensure comprehensive coverage

2. Automatic Compliance Documentation

- EU AI Act Mapping: Each test, remediation, and mitigation is automatically logged in a compliance-ready format

- Audit-Ready Reports: Generate structured EU AI Act reports showing due diligence, human oversight, and post-market monitoring

- Export Formats: PDF, JSON, and CSV exports for regulators, customers, and due diligence
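As a rough illustration of what a logged entry and its JSON and CSV exports might look like, here is a minimal Python sketch. Genbounty's actual schema and export API are not described in this listing, so the `ComplianceRecord` class, its field names, and the sample findings are all hypothetical.

```python
import csv
import io
import json
from dataclasses import asdict, dataclass, fields


@dataclass
class ComplianceRecord:
    """Hypothetical log entry; the field names are illustrative only."""
    finding_id: str
    activity: str            # "test", "remediation", or "mitigation"
    summary: str
    verified_by_human: bool
    obligation: str          # free-text label for the obligation the entry supports


def to_json(records):
    """Serialize the records as a JSON array of objects."""
    return json.dumps([asdict(r) for r in records], indent=2)


def to_csv(records):
    """Serialize the records as CSV with one header row."""
    buf = io.StringIO()
    names = [f.name for f in fields(ComplianceRecord)]
    writer = csv.DictWriter(buf, fieldnames=names)
    writer.writeheader()
    for r in records:
        writer.writerow(asdict(r))
    return buf.getvalue()


# Made-up sample data, not real findings.
records = [
    ComplianceRecord("F-001", "test",
                     "Prompt injection bypassed content filter",
                     True, "human oversight"),
    ComplianceRecord("F-001", "remediation",
                     "Filter rules updated and retested",
                     True, "post-market monitoring"),
]
```

Calling `print(to_json(records))` or `print(to_csv(records))` shows the same two entries in either format. Keeping one record type and deriving each export from it is one way to keep the formats consistent with each other.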

3. Post-Market Monitoring (PMM)

- Consumer Reporting: Enable consumers to report critical AI safety issues directly to development teams

- Product Liability Directive Assurance: Continuous monitoring builds an evidence trail that supports a company's position under the EU Product Liability Directive

- Incident-Ready Workflows: Continuous monitoring captures oversight logs, findings, and remediation evidence

4. EU AI Act Compliance Integration

- Risk Classification: Map AI systems to EU AI Act risk levels (Unacceptable, High-risk, Limited, Minimal)

- Compliance Automation: Built-in compliance features eliminate manual documentation errors

- Regulatory Partnership: Work with trusted regulatory partners to achieve CE marking and bring products to market
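The risk classification step can be pictured with a small Python sketch. It is a simplified illustration, not Genbounty's method and not legal advice: the `IntakeAnswers` flags and the `classify` function are hypothetical stand-ins for a proper legal assessment, and only the four risk level names come from the list above.

```python
from dataclasses import dataclass
from enum import Enum


class RiskLevel(Enum):
    UNACCEPTABLE = "Unacceptable"
    HIGH_RISK = "High-risk"
    LIMITED = "Limited"
    MINIMAL = "Minimal"


@dataclass
class IntakeAnswers:
    """Hypothetical yes/no answers gathered during an assessment."""
    uses_prohibited_practice: bool   # e.g. a practice the Act bans outright
    in_high_risk_use_case: bool      # e.g. a use case the Act lists as high-risk
    has_transparency_duty: bool      # e.g. the system interacts directly with people


def classify(answers: IntakeAnswers) -> RiskLevel:
    """Return the most severe tier that applies, checked from strictest to mildest."""
    if answers.uses_prohibited_practice:
        return RiskLevel.UNACCEPTABLE
    if answers.in_high_risk_use_case:
        return RiskLevel.HIGH_RISK
    if answers.has_transparency_duty:
        return RiskLevel.LIMITED
    return RiskLevel.MINIMAL


# Example: a customer-facing chatbot outside any listed high-risk use case.
chatbot = IntakeAnswers(False, False, True)
level = classify(chatbot)
```

Checking the tiers from strictest to mildest means a system that matches several flags is always assigned the most severe level, which is the conservative choice for a first-pass triage.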